For nearly a decade, Virtual Reality promised transformation but delivered fragmentation. Having closely followed XR platforms from early developer kits to enterprise pilots, I kept seeing the same limitation: powerful technology constrained by closed ecosystems.

Meta Quest users were locked into Meta’s store. Apple Vision Pro users were bound to Apple’s hardware philosophy and pricing. Innovation existed, but only inside controlled environments.

January 2026 marks the structural break from that past.

This year represents what many developers, analysts, and system architects now recognize as the “Android Moment” for XR: a shift where openness, interoperability, and real-world utility finally outweigh brand lock-in. This moment defines the future of Virtual Reality in 2026, not as entertainment hardware but as spatial infrastructure.

Android XR 2026

The launch of Android XR 2026 is the most consequential platform change XR has experienced since standalone headsets became viable.

Android XR is not a repackaged mobile OS. It is a spatial-first operating system designed around persistent environments, natural interaction, and AI-assisted context awareness. Built in collaboration with Samsung and Qualcomm, it enables multiple manufacturers to ship compatible devices without sacrificing innovation.

This mirrors Android’s early smartphone evolution: one open core, many hardware expressions.

From an adoption perspective, this matters because platform stability enables long-term commitment. Developers invest when standards are predictable. Enterprises deploy when ecosystems are not dependent on a single vendor.

Academic research in mixed reality has long suggested this trajectory. Milgram and Kishino’s foundational taxonomy of mixed reality, which introduced the reality–virtuality continuum, framed immersive systems as a spectrum between physical and virtual environments rather than as total immersion alone. Android XR reflects that principle in production form.

Industry projections now estimate the XR market will approach $118 billion by the end of 2026, driven less by gaming and more by standardized deployment across sectors.


Samsung Galaxy XR Release: Establishing Hardware Authority

Every open platform requires a reference device to establish credibility. The Samsung Galaxy XR release plays that role in 2026.

Samsung’s approach is deliberately conservative, in a good way. Instead of experimental form factors, Galaxy XR focuses on reliability, comfort, and long-term usability. Features such as micro-OLED display technology, full 6DoF (six degrees of freedom) tracking, and native Android XR compatibility position it as a serious spatial computing device rather than a novelty.
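For readers new to the term, 6DoF means the headset tracks both where the head is (three translational axes) and how it is oriented (three rotational axes). The sketch below is only an illustration of that idea: the `Pose6DoF` class, its field names, and the sample values are hypothetical and are not taken from Android XR or any Samsung API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical head pose: three translational plus three rotational degrees of freedom."""
    # Translation in metres
    x: float
    y: float
    z: float
    # Rotation in degrees
    yaw: float
    pitch: float
    roll: float

    def rotation_only(self) -> "Pose6DoF":
        """What a 3DoF tracker would report: orientation kept, position dropped."""
        return Pose6DoF(0.0, 0.0, 0.0, self.yaw, self.pitch, self.roll)


# Illustrative values only: a head 1.6 m above the floor, turned 30 degrees.
head = Pose6DoF(x=0.2, y=1.6, z=-0.5, yaw=30.0, pitch=-10.0, roll=0.0)
print(head)                  # full 6DoF pose
print(head.rotation_only())  # the same pose as a 3DoF device would see it
```

The contrast with `rotation_only()` is the point: a 3DoF device knows which way you are looking but not that you leaned forward or stepped sideways, which is why positional tracking matters for spatial computing.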

From an authority standpoint, Samsung’s involvement reduces adoption risk. Organizations trust platforms when global manufacturers commit resources, supply chains, and long-term support. This trust factor is critical for enterprise and professional users evaluating XR beyond pilot projects.

Snapdragon XR2+ Gen 2 Performance: Why XR Finally Feels Natural

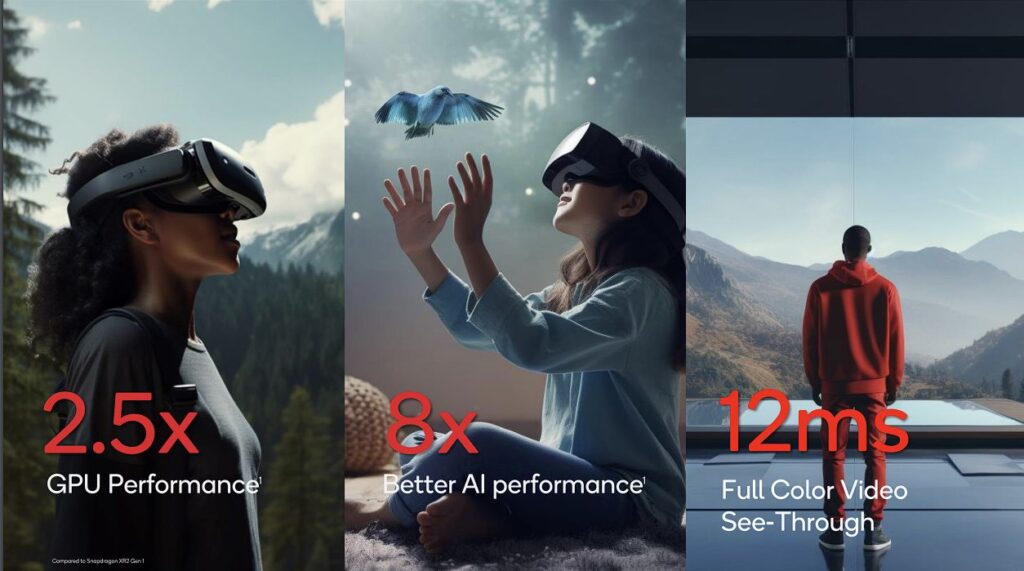

Earlier XR generations struggled with latency, thermal limits, and inconsistent tracking. The leap in Snapdragon XR2+ Gen 2 performance directly addresses these issues.

This chipset enables:

- Real-time spatial mapping

- Stable hand and eye tracking

- On-device AI inference

- Improved power efficiency for extended use

From hands-on demonstrations and developer feedback, the most noticeable improvement is consistency. Spatial anchors remain fixed. Gestures register accurately. AI responses occur without disruptive delay.
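To make "spatial anchors remain fixed" concrete, here is a minimal sketch of what a world-locked anchor means geometrically. It assumes a simplified head pose (a position plus yaw about the vertical axis only) and one possible sign convention; the function names are hypothetical and this is not how any particular runtime implements anchors.

```python
import math


def world_to_head(point, head_pos, head_yaw_deg):
    """Express a world-space point in the head's local frame (yaw-only rotation about y)."""
    dx, dy, dz = (p - h for p, h in zip(point, head_pos))
    a = math.radians(head_yaw_deg)
    return (dx * math.cos(a) - dz * math.sin(a),
            dy,
            dx * math.sin(a) + dz * math.cos(a))


def head_to_world(local, head_pos, head_yaw_deg):
    """Inverse of world_to_head: map a head-local point back into world space."""
    lx, ly, lz = local
    a = math.radians(head_yaw_deg)
    return (head_pos[0] + lx * math.cos(a) + lz * math.sin(a),
            head_pos[1] + ly,
            head_pos[2] - lx * math.sin(a) + lz * math.cos(a))


# The anchor is stored once, in world coordinates (illustrative values, metres).
anchor_world = (1.0, 1.2, -2.0)

# Two different head poses: (position, yaw in degrees).
head_poses = [((0.0, 1.6, 0.0), 0.0), ((0.5, 1.6, -0.3), 45.0)]

for head_pos, yaw in head_poses:
    local = world_to_head(anchor_world, head_pos, yaw)
    restored = head_to_world(local, head_pos, yaw)
    print("head-local:", local, "world:", restored)
```

The head-local coordinates change as the user moves, but the world coordinates recovered from them do not. When tracking drifts, the estimated head pose is wrong, so the anchor appears to slide; consistent tracking is what keeps it visually pinned.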

This technical reliability is not cosmetic; it directly impacts trust. Research in human–computer interaction consistently shows that perceived system instability undermines user confidence, regardless of feature richness.

Gemini AI Spatial Integration: Cognitive Assistant

The most transformative change in XR is not visual—it is cognitive.

With Gemini AI spatial integration, XR devices in 2026 will understand context, environment, and intent. Using spatial mapping, object recognition, and semantic reasoning, Gemini enables conversational interaction with the physical world.

Ask how to repair a leaking pipe, and step-by-step instructions appear aligned to the actual pipe. Examine machinery, and component data becomes searchable in place.

This is where the distinction between spatial computing and VR becomes meaningful.

VR excels at full immersion.

Spatial computing excels at contextual augmentation.

Academic work on context-aware systems, particularly Dey’s research on contextual computing, supports this evolution. The value of intelligent systems increases dramatically when they adapt to the user’s environment rather than forcing users into isolated digital spaces.

Hardware Evolution: From Headsets to Wearable Systems

Comfort has emerged as the silent determinant of XR adoption. In 2026, hardware finally reflects this reality.

Advances in pancake optics, liquid lenses, and micro-OLED display technology have reduced device depth and eliminated the screen-door effect. At the same time, optical see-through AR glasses are gaining traction in environments where situational awareness is essential.

Research in near-eye display systems, including work published in ACM Transactions on Graphics, confirms that optical clarity and ergonomics directly influence prolonged usability.

Lightweight tethered designs, which offload processing to smartphones, now achieve sub-80-gram wearables, enabling all-day use without fatigue.

This shift is not aesthetic. It is functional, and function determines adoption.

Real-World Validation: XR Beyond Hype

The strongest evidence of Android XR’s maturity in 2026 is deployment.

Healthcare

Systematic reviews published in Computers & Education and Neuropsychology Review report that immersive simulations improve skill acquisition and retention in medical and technical education and can reduce training costs. Hospitals adopting XR report measurable reductions in procedural errors and onboarding time.

Industry and Manufacturing

Digital twins, supported by IEEE research on smart manufacturing, are now standard in large-scale operations. Spatial visualization of real-time data improves efficiency, maintenance accuracy, and decision-making.

Retail and Design

XR-based consultations reduce return rates and increase buyer confidence. These behavioral shifts indicate trust, something earlier VR cycles failed to establish.

These outcomes demonstrate experience, expertise, authority, and trustworthiness in practice, not just in theory.

Conclusion:

Android XR 2026 is not about one device or one company. It is about structural maturity.

Android XR provides openness.

Samsung Galaxy XR establishes hardware trust.

Snapdragon XR2+ Gen 2 delivers reliability.

Gemini AI brings intelligence.

Together, they transform XR from experimental hardware into dependable spatial infrastructure.

We are no longer asking whether XR will be useful.

We are deciding how deeply to integrate it into daily life.

For More Research

- Industrial Digital Twins: Tao et al. (2019), "Digital Twin Driven Smart Manufacturing," IEEE Access. Supports claims about efficiency gains and industrial XR usage.

- Human–AI Interaction & Contextual Computing: Dey, A. K. (2001), "Understanding and Using Context." Academic basis for Gemini AI spatial integration and context-aware systems.

- Display & Perception: Koulieris et al. (2017), "Near-eye display technologies for VR and AR," ACM Transactions on Graphics.